Introduction to Neural Networks — Perceptrons

HISTORY (just a little bit)

Perceptrons are a type of artificial neuron that uses the Heaviside step function as its activation function.

In 1943, the scientists Warren McCulloch and Walter Pitts proposed the mathematical model of an artificial neuron on which the perceptron is based.

Frank Rosenblatt developed the perceptron in the late 1950s and built the first perceptron hardware, the Mark I Perceptron.

Enough history!

Let’s get down to the mathematics…

MATH

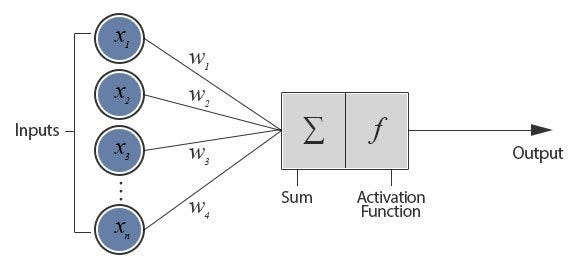

A perceptron takes several binary inputs, x1, x2, …, xn, and produces a single binary output.

In the diagram, the perceptron takes three inputs, but in general it can take fewer or more.

Rosenblatt proposed a simple rule to compute the output. He introduced weights, w1, w2, …, wn, real numbers expressing the importance of the respective inputs to the output.

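The neuron's output, 0 or 1, is determined by whether the weighted sum $\sum_j w_j x_j$ is less than or greater than some threshold value, a real number:

$$
\text{output} = \begin{cases} 0 & \text{if } \sum_j w_j x_j \le \text{threshold} \\ 1 & \text{if } \sum_j w_j x_j > \text{threshold} \end{cases}
$$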
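As a minimal sketch, the threshold rule can be written in a few lines of Python. The function name `perceptron_output` and the example weights and threshold are illustrative assumptions, and a weighted sum exactly equal to the threshold is assumed to give 0.

```python
def perceptron_output(weights, inputs, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0

# Three binary inputs; the weights and threshold are illustrative only.
print(perceptron_output([6, 2, 2], [1, 0, 0], threshold=5))  # 6 > 5, so 1
print(perceptron_output([6, 2, 2], [0, 1, 1], threshold=5))  # 4 <= 5, so 0
```

Here the first input carries enough weight to push the sum past the threshold on its own, while the other two together do not.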

We can think of the perceptron as a device that makes decisions by weighing up evidence (literally).

A larger weight wj indicates that input xj matters more to the decision than the other inputs.

Just by varying the weights and the threshold, we can get different models of decision-making.
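To see this, here is a small sketch that feeds the same evidence to three differently configured perceptrons. The evidence vector, weights, and thresholds are hypothetical values picked for illustration.

```python
def perceptron_output(weights, inputs, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0

# Hypothetical evidence: the first factor is absent, the other two are present.
evidence = [0, 1, 1]

# Model A: the first input dominates, so its absence blocks a "yes".
model_a = perceptron_output([6, 2, 2], evidence, threshold=5)  # 4 <= 5
# Model B: same threshold, but the last two inputs now weigh more.
model_b = perceptron_output([2, 3, 3], evidence, threshold=5)  # 6 > 5
# Model C: original weights, but a lower threshold.
model_c = perceptron_output([6, 2, 2], evidence, threshold=3)  # 4 > 3

print(model_a, model_b, model_c)
```

Identical evidence yields "no" under model A and "yes" under models B and C, purely because of the chosen weights and threshold.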

Clearly, the perceptron isn't a complete model of human decision-making! But it does show how a perceptron can weigh up different kinds of evidence in order to make decisions.

Arguably, a complex network of perceptrons could make quite subtle decisions.

Let's interpret a network of perceptrons arranged in columns (layers):

The first column of perceptrons makes three very simple decisions by weighing the input evidence.

The second column of perceptrons makes its decisions by weighing up the results from the first column (pretty much like the chain reaction game). Understandably, the perceptrons in the second column can make decisions at a more complex and abstract level than those in the first.

Even more complex decisions can be made by the perceptron in the third column. In this way, a many-layer network of perceptrons can engage in sophisticated decision-making.
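To make the layered picture concrete, here is a minimal sketch of a three-layer network of threshold perceptrons. The layer sizes, weights, and thresholds are hypothetical, and `layer_output` and `network_output` are names introduced only for this example. Each perceptron still produces one output, and that output is reused as an input by every perceptron in the next layer.

```python
def perceptron_output(weights, inputs, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0

def layer_output(layer, inputs):
    """Apply every perceptron in a layer to the same inputs.
    Each perceptron is a (weights, threshold) pair."""
    return [perceptron_output(w, inputs, t) for w, t in layer]

def network_output(layers, inputs):
    """Feed each layer's outputs forward as the next layer's inputs."""
    activations = inputs
    for layer in layers:
        activations = layer_output(layer, activations)
    return activations

# Hypothetical parameters, chosen only for illustration.
first = [([1, 1, 0], 1), ([0, 1, 1], 1), ([1, 0, 1], 0)]  # three simple decisions
second = [([1, 1, 0], 1), ([0, 1, 1], 0)]                 # weighs first-layer results
third = [([1, 1], 0)]                                     # fires if either input fires

print(network_output([first, second, third], [1, 1, 0]))
```

For the input `[1, 1, 0]`, the first layer outputs `[1, 0, 1]`, the second `[0, 1]`, and the final perceptron `[1]`.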

MORE MATH

Now, some of you may have noticed an anomaly.

Earlier, I said a perceptron produces a single output, but in a network diagram it can look as though each perceptron has several.

In fact, each perceptron still has a single output. The multiple output arrows are merely a useful way of indicating that the output from one perceptron is being used as the input to several other perceptrons.

The summation notation $\sum_j w_j x_j > \text{threshold}$ is cumbersome, so we make two changes to simplify it.

First, we write the weighted sum as a dot product, $w \cdot x \equiv \sum_j w_j x_j$, where $w$ and $x$ are vectors whose components are the weights and inputs, respectively.

Second, we move the threshold to the other side of the inequality and replace it with what is known as the perceptron's bias, $b \equiv -\text{threshold}$.

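The perceptron rule now becomes:

$$
\text{output} = \begin{cases} 0 & \text{if } w \cdot x + b \le 0 \\ 1 & \text{if } w \cdot x + b > 0 \end{cases}
$$

We can think of the bias as a measure of how easy it is to get the perceptron to output a 1: with a large positive bias it is very easy, while with a very negative bias it is hard.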
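A small sketch makes the "how easy is it to fire" reading concrete. It uses the bias form of the rule with two inputs; the weights `[1, 1]` and the bias values tried are illustrative assumptions.

```python
def perceptron_output(w, x, b):
    """Bias form of the rule: output 1 if w . x + b > 0, else 0."""
    dot = sum(wj * xj for wj, xj in zip(w, x))  # w . x
    return 1 if dot + b > 0 else 0

w = [1, 1]  # illustrative weights, not taken from the article
all_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Count how many of the four possible inputs make the perceptron output 1.
for b in (-2, -1, 0, 1):
    fired = sum(perceptron_output(w, x, b) for x in all_inputs)
    print(f"bias={b:>2}: outputs 1 for {fired} of 4 inputs")
```

As the bias rises from -2 to 1, the perceptron fires for 0, 1, 3, and then all 4 inputs. With these weights, b = -1 behaves like AND and b = 0 like OR.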

So, perceptrons are a method for weighing evidence to make decisions.

BOOLEAN ALGEBRA

Another use of perceptrons is in computing elementary logical functions such as AND, OR, and NAND.

A smart place to start is the NAND gate. Anyone with an electronics background will know that NAND is a universal gate: any logical function can be implemented using NAND gates alone.

NAND GATE

Suppose we have a perceptron with two inputs, each with a weight of -2, and an overall bias of 3.

Taking input 00 produces output 1, since (-2)*0 + (-2)*0 + 3 = 3 is positive.

Similarly, inputs 01 and 10 produce output 1, since (-2)*0 + (-2)*1 + 3 = 1 is positive.

An input of 11, however, produces output 0, since (-2)*1 + (-2)*1 + 3 = -1 is negative.

Clearly, we have a NAND gate implemented using a perceptron.
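The check above can be automated with a short sketch that runs all four inputs through the perceptron with weights -2, -2 and bias 3. The helper names `perceptron_output` and `nand` are introduced here for illustration.

```python
def perceptron_output(w, x, b):
    """Bias form of the rule: output 1 if w . x + b > 0, else 0."""
    dot = sum(wj * xj for wj, xj in zip(w, x))
    return 1 if dot + b > 0 else 0

def nand(x1, x2):
    """NAND gate as a perceptron: weights -2, -2 and bias 3."""
    return perceptron_output([-2, -2], [x1, x2], 3)

# Print the full truth table.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, nand(x1, x2))
```

Only the input 11 gives 0, which is exactly the NAND truth table.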

Now we can build a circuit from these NAND gates that adds two bits, x1 and x2.

This requires computing the bitwise sum, x1 XOR x2, and the carry bit, x1 AND x2.

We then replace every NAND gate with a perceptron that has two inputs, each with a weight of -2, and an overall bias of 3.
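Here is a minimal sketch of such an adder built only from NAND perceptrons. The particular wiring (four NANDs for the sum and one NAND fed the same signal twice for the carry) is a common textbook arrangement, assumed here rather than taken from the article, and `half_adder` is a hypothetical helper name.

```python
def perceptron_output(w, x, b):
    """Bias form of the rule: output 1 if w . x + b > 0, else 0."""
    dot = sum(wj * xj for wj, xj in zip(w, x))
    return 1 if dot + b > 0 else 0

def nand(a, b):
    """NAND perceptron: weights -2, -2 and bias 3."""
    return perceptron_output([-2, -2], [a, b], 3)

def half_adder(x1, x2):
    """Add two bits using only NAND perceptrons. Returns (sum_bit, carry_bit)."""
    n1 = nand(x1, x2)
    n2 = nand(x1, n1)
    n3 = nand(x2, n1)
    sum_bit = nand(n2, n3)    # equals x1 XOR x2
    carry_bit = nand(n1, n1)  # NOT (x1 NAND x2), i.e. x1 AND x2
    return sum_bit, carry_bit

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, "+", x2, "->", half_adder(x1, x2))
```

For example, 1 + 1 gives a sum bit of 0 and a carry bit of 1, i.e. binary 10.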

The computational universality of perceptrons is reassuring, as it tells us that networks of perceptrons can be as powerful as any other computing device.

It turns out that we can devise learning algorithms that can automatically tune the weights and biases of a network of artificial neurons.

Sounds cool, right?

This tuning happens in response to external stimuli, without direct intervention by a programmer. These learning algorithms enable us to use artificial neurons in a way radically different from conventional logic gates.

Instead of explicitly laying out a circuit of NAND and other gates, our neural networks can simply learn to solve problems.
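The article doesn't name a specific learning algorithm. As one illustration, here is a minimal sketch of Rosenblatt's classic perceptron learning rule, my choice of example rather than necessarily the method the author has in mind (modern networks are more commonly trained with gradient-based methods). Whenever a training example is misclassified, each weight is nudged by `lr * error * x_j` and the bias by `lr * error`. The function names, learning rate, and epoch limit are assumptions.

```python
def predict(w, b, x):
    """Bias form of the perceptron rule: 1 if w . x + b > 0, else 0."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights and bias on each mistake."""
    n = len(samples[0][0])
    w = [0.0] * n
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0, or +1
            if error != 0:
                errors += 1
                w = [wj + lr * error * xj for wj, xj in zip(w, x)]
                b += lr * error
        if errors == 0:  # every sample classified correctly; stop early
            break
    return w, b

# NAND truth table as training data.
nand_samples = [((0, 0), 1), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
w, b = train_perceptron(nand_samples)
print(w, b)
print([predict(w, b, x) for x, _ in nand_samples])
```

On this data the rule settles on weights [-2.0, -1.0] and bias 3.0: a different but equally valid NAND perceptron than the hand-designed one with weights -2, -2 and bias 3, found without anyone choosing the numbers.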
